Report on How Google Has Streamlined Postmortem Analysis

In this episode, I want to look at how to write an incident report, also known as a postmortem. Rather than show you something I put together myself, I will use a Google Incident Report from early 2013, which I think is an excellent example.

Before we get started, let me clarify that I have no affiliation with Google in any way. I am just impressed with how they handled the incident, and I believe their report should serve as a model for others to follow. The episode notes below contain a link to the Incident Report.

As IT professionals, we all know that even with the best of intentions and careful planning, things occasionally go wrong. When something goes badly wrong, you may be asked to prepare an Incident Report, which might be shared with senior executives, other employees, or even customers. Even if nobody ends up reading it, I still advise you to go through the process, because it is a useful exercise: it makes you analyse your environment when things go wrong and develop strategies to avoid the same mistakes in the future.

Google's incident report on an outage of their API service impressed me because it addressed all of my concerns and reassured me that they are a competent company. We won't read the complete report, but let's look at its format and some of the topics it covers.

The structure is surprisingly simple, considering how effective it is. The report is divided into five sections: a summary of the issue, a timeline, a root cause analysis, resolution and recovery, and finally, corrective and preventive actions. Let's take a closer look at each of these sections.

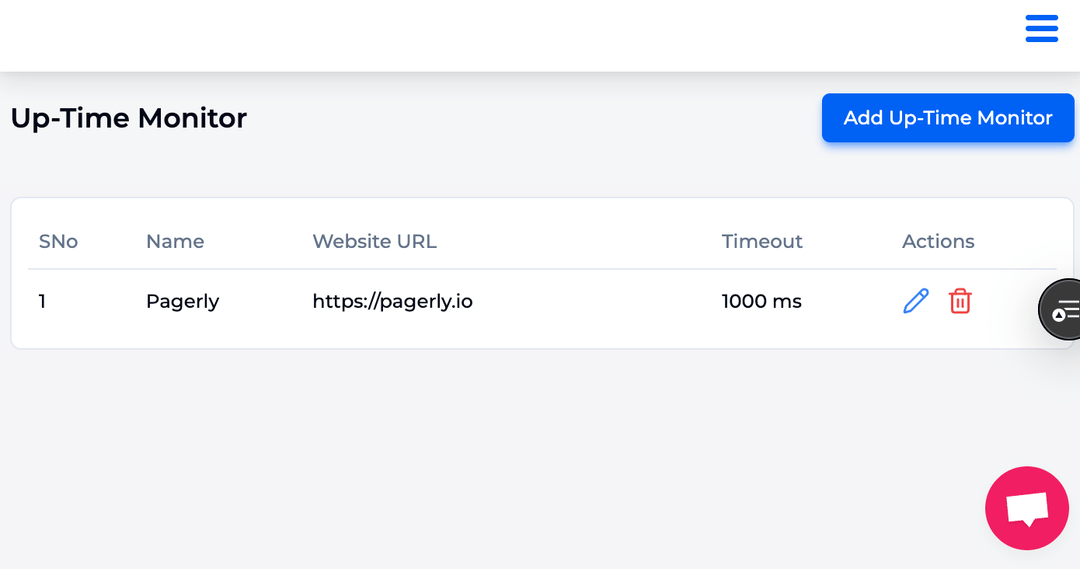

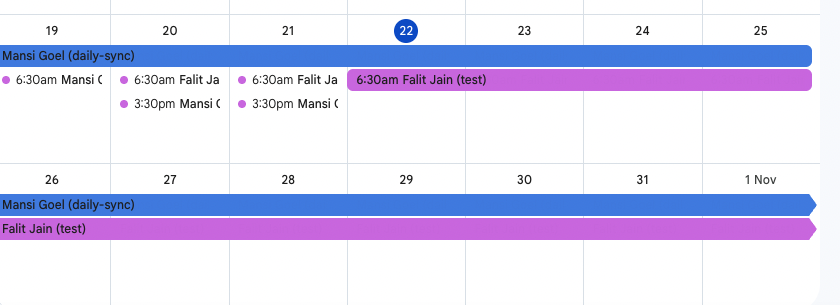
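If you want to reuse the five-section layout for your own reports, it can be captured as a small template. What follows is only a minimal Python sketch of that idea, not anything taken from Google: the class names, field names, and section headings are my own assumptions, and the values in the example are placeholders rather than details from the real report.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TimelineEntry:
    time: str   # kept as plain text for simplicity, e.g. "10:00"
    event: str


@dataclass
class IncidentReport:
    """Hypothetical template mirroring the five sections described above."""
    title: str
    summary: str
    root_cause: str
    resolution_and_recovery: str
    timeline: list[TimelineEntry] = field(default_factory=list)
    corrective_actions: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Return the report as plain text, one section after another."""
        lines = [self.title, "", "Issue summary", self.summary, "", "Timeline"]
        lines += [f"- {entry.time}: {entry.event}" for entry in self.timeline]
        lines += ["", "Root cause", self.root_cause]
        lines += ["", "Resolution and recovery", self.resolution_and_recovery]
        lines += ["", "Corrective and preventive actions"]
        lines += [f"- {action}" for action in self.corrective_actions]
        return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder content only; replace with the facts of your own incident.
    report = IncidentReport(
        title="Example service outage (placeholder)",
        summary="What broke, for how long, and who was affected.",
        root_cause="The change or fault that triggered the outage.",
        resolution_and_recovery="How service was restored.",
        timeline=[TimelineEntry("10:00", "Alert sent to the on-call team")],
        corrective_actions=["Add a test that covers the failed change"],
    )
    print(report.render())
```

Keeping the sections as named fields means a report cannot be rendered until every section has at least been thought about, which is most of the value of the exercise.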

This incident report also shows that Google has numerous internal systems and processes running in the background. These, in my opinion, are best practices for every business. For instance, we can tell that they have automated service monitoring and alerting, because they have a record of when the outage started and of the pager alert that was sent to the team. They also have change management in place, which lets them track who made which changes and, eventually, attempt to roll them back. This is crucial, in my opinion: without this visibility into changes, it takes a long time to identify the initial cause of a problem, never mind reverse it. They also did not sugarcoat the fact that testing was skipped and that the configuration push was not done in the safest way.
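To make the point about change visibility concrete, here is a minimal Python sketch of the idea. It is not how Google's tooling works, which the report does not describe in detail. The `Change` record, the `suspect_changes` function, and the one-hour default window are all names and values I made up for illustration. Given a log of who changed what and when, it lists the changes made shortly before an outage started, newest first, since those are the first candidates for a rollback.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Change:
    """One hypothetical change-log entry: who changed what, and when."""
    when: datetime
    author: str
    description: str


def suspect_changes(
    changes: list[Change],
    outage_start: datetime,
    window: timedelta = timedelta(hours=1),  # assumed default, tune to taste
) -> list[Change]:
    """Return changes made in the window before the outage, newest first."""
    earliest = outage_start - window
    recent = [c for c in changes if earliest <= c.when <= outage_start]
    return sorted(recent, key=lambda c: c.when, reverse=True)


if __name__ == "__main__":
    # Placeholder data, not taken from any real incident.
    outage = datetime(2020, 1, 1, 12, 0)
    log = [
        Change(outage - timedelta(hours=3), "alice", "Routine dependency update"),
        Change(outage - timedelta(minutes=30), "bob", "Configuration push"),
    ]
    for change in suspect_changes(log, outage):
        print(change.when, change.author, change.description)
```

Without a log like this, the same question ("what changed just before things broke?") has to be answered by asking around, which is exactly the delay that paragraph warns about.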

So, if you ever find yourself having to write an Incident Report, I strongly advise looking at Google's Incident Report, which is linked in the episode notes below. It is also worth considering whether you can replicate their internal systems and processes in your own environment.